If you’re not running Octopus, go out and get it today. An Octopus Deploy server has become as essential as a build server in modern development departments. This post is about how I’ve been implementing them, which I’ve done in a few setups so far.

The “Source to Build” setup is simple, so I’m not going to go too much into that. If you can’t get TeamCity talking to your source control, you are probably in the wrong field.

TeamCity is the meat in this sandwich so this post focuses mainly on that.

The only out-of-the-ordinary thing that I ever did was TeamCity->VSO, which requires you to generate basic auth creds (https://www.visualstudio.com/en-us/integrate/get-started/auth/overview) for an account and then put them into the TeamCity VCS root. The odd thing about this is that when connecting to TFS (on-premises) the username format is DOMAIN\Username, but with VSO it’s ##LIVE##\Username

e.g. ##LIVE##\JohnDoe
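As a quick illustration of the two username formats, here is a minimal Python sketch. The function name `vcs_username` and the `CONTOSO` domain are made up for the example; only the `DOMAIN\Username` and `##LIVE##\Username` formats come from the setup described above.

```python
from typing import Optional

# Prefix used for VSO accounts, as described in the post
VSO_DOMAIN = "##LIVE##"


def vcs_username(user: str, domain: Optional[str] = None) -> str:
    """Format a username for the TeamCity VCS root.

    With a domain, this gives the on-premises TFS form (DOMAIN + backslash + user).
    Without one, it falls back to the VSO form using the ##LIVE## prefix.
    """
    prefix = domain if domain else VSO_DOMAIN
    return f"{prefix}\\{user}"


print(vcs_username("JohnDoe"))             # VSO form
print(vcs_username("JohnDoe", "CONTOSO"))  # on-premises form, hypothetical domain
```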

Also on that note, I recommend using the “AssemblyInfo patcher” to get your version numbers up to date.

And then there’s VCS labeling: throw a label into TFVC for every build. The convention I normally use is “BuildName_VersionNumber”.

I also create a fake build in TFS for some of my projects with the associated link, so we can still make use of the work item and test case links back to builds (see https://github.com/JakeGinnivan/TfsBuildResultPublisher)

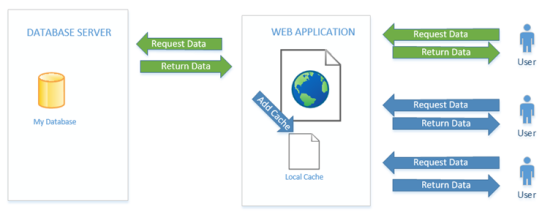

Octopus feeds off a NuGet repo, so the next step is to get your packages into a NuGet feed. Octopus has a NuGet feed built in, but you can run your own as well. You can’t use the Octopus NuGet feed for anything else though, so if you are pushing shared private libraries and need a target (like I do) then you’ll need to get another NuGet solution.

The first step, though, is to get your Visual Studio projects outputting the NuGet packages. To do this, add OctoPack to them:

https://www.nuget.org/packages/OctoPack/

Once this is done you’ll get the build output you need and can add a “NuGet Publish” step in TeamCity to push up the output.

The packages will output to a sub folder, so I usually use the following Packages location in the step to avoid picking up unwanted packages.

**\octopacked\*.nupkg
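To see what that pattern is meant to pick up, here is a rough Python sketch that does a similar search with `pathlib`. It is only an approximation of TeamCity’s wildcard matching (for one thing, it is case-sensitive on Linux), and the function name `find_octopacked` is made up for the example.

```python
from pathlib import Path
from typing import List


def find_octopacked(checkout_dir: str) -> List[Path]:
    """Return .nupkg files that sit directly inside any 'octopacked' folder.

    Packages elsewhere in the tree (e.g. a restored 'packages' folder)
    are ignored, which is the point of the narrower pattern.
    """
    root = Path(checkout_dir)
    # '**' matches the root itself and every directory below it
    return sorted(root.glob("**/octopacked/*.nupkg"))
```

Running `find_octopacked` against a checkout directory should list only the packages OctoPack produced, not every `.nupkg` lying around in the tree.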

Then you can drop in the API key from your Octopus user (http://docs.octopusdeploy.com/display/OD/How+to+create+an+API+key) and set the package source to https://MYOCTOPUSSERVER/nuget/packages

Then for the next step in TeamCity you’ll need the plugin installed (http://docs.octopusdeploy.com/display/OD/TeamCity). This will give you the option of an “OctopusDeploy: Create Release” step that you can run once the NuGet packages are published.
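The TeamCity step handles the push for you, but if you want to try it by hand, the roughly equivalent `nuget.exe push` call can be sketched like this in Python. The function name, the package file name and the `API-XXXXXXXX` key are placeholders, and the sketch only builds the command rather than running it.

```python
from typing import List


def nuget_push_command(package_path: str, api_key: str, server: str) -> List[str]:
    """Build the argument list for pushing one package to the Octopus feed.

    Assumes nuget.exe is on the PATH and that the feed lives at
    https://<server>/nuget/packages, as in the post.
    """
    source = f"https://{server}/nuget/packages"
    return ["nuget", "push", package_path, "-ApiKey", api_key, "-Source", source]


# Placeholder values; substitute your own package, key and server name
cmd = nuget_push_command("MyWebSite.1.0.0.nupkg", "API-XXXXXXXX", "MYOCTOPUSSERVER")
print(" ".join(cmd))
```

Passing the list to `subprocess.run(cmd, check=True)` would actually perform the push, so keep the real API key out of source control.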

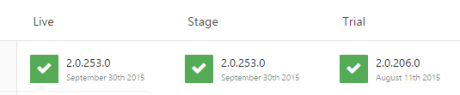

From this step you can configure things like an initial environment to auto-deploy to, etc. It’s very well done.

If you read my previous post about adding release notes you will understand the last variable in that screenshot. I put HTML content with hyperlinks back into the TFS web interface (work items and changesets) into the Octopus release notes for easy track-back.

Once you’ve got all this up you’ll need to configure Octopus.

I normally match my solutions in VS to projects in Octopus one for one, then create a step in Octopus for each deployable project (e.g. each web site, web service, Windows service, etc.).

Each Visual Studio output project is configured as a “Deploy a NuGet package” step.

After creating it, go down to the features to see the available options.

The IIS support is good but not perfect (it doesn’t support sub folders at this time). Some of my web services deploy to version-numbered sub-folders (e.g. https://mysite.com/v1.5/ ), so we had to roll our own deployment scripts for this.
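The deployment scripts themselves are out of scope here, but the naming part of the idea can be sketched in a few lines of Python. This assumes the sub-folder is built from the major and minor parts of the package version, which is a guess for illustration; the function names are made up too.

```python
def versioned_subfolder(version: str) -> str:
    """Turn a version like '1.5.0.123' into a sub-folder name like 'v1.5'.

    Assumption: only the major and minor parts are used for the folder.
    """
    parts = version.split(".")
    if len(parts) < 2 or not all(p.isdigit() for p in parts[:2]):
        raise ValueError(f"expected at least major.minor, got {version!r}")
    return f"v{parts[0]}.{parts[1]}"


def versioned_url(base_url: str, version: str) -> str:
    """Join the site root and the versioned sub-folder into one URL."""
    return f"{base_url.rstrip('/')}/{versioned_subfolder(version)}/"


print(versioned_url("https://mysite.com", "1.5.0.123"))
```

A custom deployment script could use the same folder name when deciding where under the site root to put each release.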

The XML transform and substitution is great.

I’m not a fan of the Red Gate database stuff, but each to their own.

A Windows service is a Windows service.

Once this is configured you can drop a few Tentacles on servers, broken up into environments (dev/live/etc.), and away you go.

I’ll get into the detail of the Tentacles and how I deploy them in other posts. There are a lot of options here, including good integration with Azure VMs. But the sky’s the limit from here really. The Octopus/Tentacle combo gives you some great flexibility and only has a few holes, which I’ve got some advice on for future posts.