A while back I set up a Klondike server to host our internal NuGet packages. We use it for both internal libraries and Octopus.

Microsoft recently released the Package Management feature for VSTS (formerly known as VSO). The exciting thing about Package Management is that they have hinted they will add support for npm and Bower in the future, so you will have a single source for all your package management.

After installing it in VSTS, you will get a new “Package” option in the top bar.

From here you can create new feeds. In my case I’ve decided to split my feeds up as one per project, but you could easily create more per project if, for example, you had separate responsibilities that needed more granular permissions. You can restrict the Publish and Read rights on each feed to users or groups within VSTS, so it’s very easy to manage, unlike the permissions workaround in my previous post about Klondike.

Because we use TeamCity, I have considered giving the build service its own account in VSTS, since it needs credentials, but in this example I’m just using my own account.

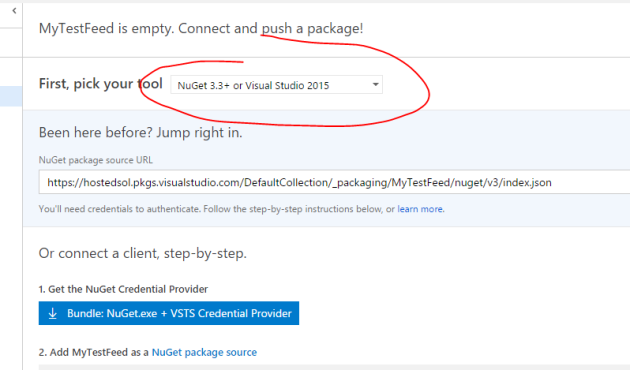

You will need to change the “pick your tool” option to NuGet 2.x to get credentials you can use in the TeamCity steps.

Then click “Generate nuget Credentials” and copy the username and password.

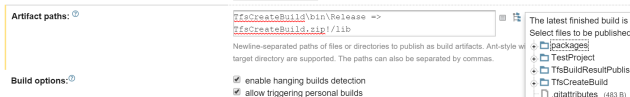

Next hop over to your TeamCity Server, and edit/add your build configuration.

It’s important to note that you will need at least TeamCity version 9.1.6 to do this, as that release includes a fix for NuGet credentials.

First jump into “Build Features” and add a set of NuGet credentials with the URL of your feed that you got from the VSTS interface.

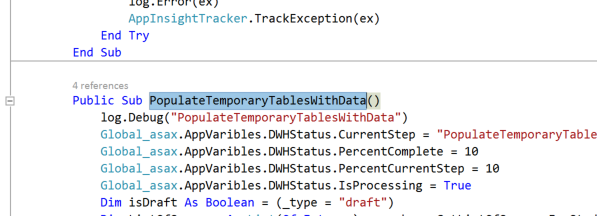
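If you want to check the generated credentials outside TeamCity first, a rough equivalent is to register the feed with `nuget.exe` yourself. Below is a minimal sketch that only builds the command line and does not run it. The feed name, feed URL and environment variable names are placeholders I made up for illustration, so swap in the values VSTS gives you.

```python
import os
import shlex


def nuget_add_source_args(name, feed_url, username, password):
    """Build the argument list for `nuget sources Add` with stored credentials."""
    return [
        "nuget", "sources", "Add",
        "-Name", name,
        "-Source", feed_url,
        "-UserName", username,
        "-Password", password,
    ]


if __name__ == "__main__":
    # Placeholder values: the real URL and credentials come from the VSTS feed page.
    args = nuget_add_source_args(
        name="MyFeed",
        feed_url="https://example.pkgs.visualstudio.com/_packaging/MyFeed/nuget/v2",
        username=os.environ.get("VSTS_FEED_USER", "user"),
        password=os.environ.get("VSTS_FEED_PASSWORD", "password"),
    )
    # Print the command so it can be reviewed before running it by hand.
    print(shlex.join(args))
```

Reading the credentials from environment variables keeps them out of scripts and source control, which matters more once the build service has its own account.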

Then jump over to your build steps and edit/add your NuGet steps. Below is an example of my publish step.

I’ve set the API key to “VSTS”, as per the instructions in the VSTS web interface.

And we are publishing.

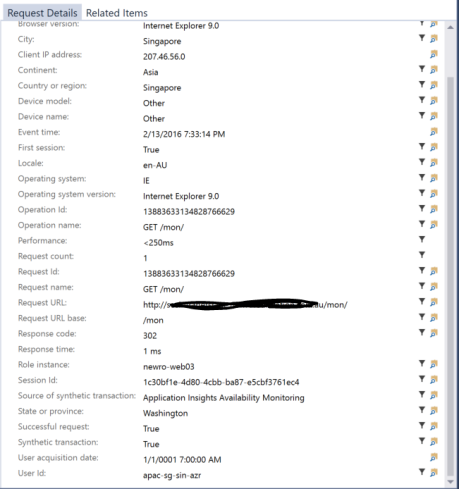

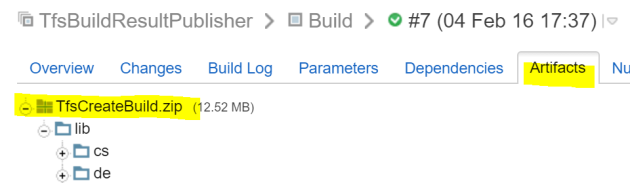
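For anyone not using the TeamCity NuGet runner, the publish step boils down to a plain `nuget push` against the feed. Here is a small sketch that builds that command. The package file name and feed URL are made-up placeholders, and it assumes the feed credentials have already been configured on the machine; the only value taken from this post is the “VSTS” API key.

```python
import shlex


def nuget_push_args(package_path, feed_url, api_key="VSTS"):
    """Build the argument list for pushing one package to a feed."""
    return ["nuget", "push", package_path, "-Source", feed_url, "-ApiKey", api_key]


if __name__ == "__main__":
    # Placeholder package and feed URL, for illustration only.
    args = nuget_push_args(
        "MyLibrary.1.0.0.nupkg",
        "https://example.pkgs.visualstudio.com/_packaging/MyFeed/nuget/v2",
    )
    # Print the command rather than running it, so it can be checked first.
    print(shlex.join(args))
```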

You will see the built packages in the VSTS interface when you are done.

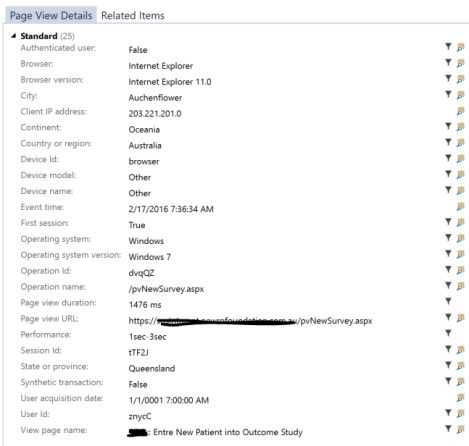

If you have an Octopus server like us, you will also need to add the credentials there, in the NuGet feeds section.

And it’s that easy.

One of our concerns about the Klondike server we set up was capacity. We have more than 12 developers and run CI with automatic deployment to the development environment, so we generate a large number of packages daily as developers check in and commit work. Over a period of months and years the server has become quite bloated, though to give it credit I am surprised at how long that took.

Some queries are taking upwards of 15-20 seconds at times, and we have an issue (which I have not confirmed is related) where packages are randomly “not there” after the build log says they have been successfully published.

I am hoping that the VSTS platform will last us longer, and it has the added benefit of the granular permissions, which we will be making use of as we grow.