One of the best things about Swagger is being able to generate a client. For me, Swagger is to REST what WSDL was to SOAP. One of my big dislikes about REST from the start was that it was hard to build clients because the standard was so loose. With most services, if you got the casing of one letter wrong in a large object, you would get a generic 400 response with no clue as to what the actual problem was.

Enter Swagger Codegen, a Java-based command-line app for generating proxy clients from a Swagger specification. Awesomesauce! However, I’m a .NET developer and I try to avoid adding new dependencies (like J2SE) to my development environment. That’s OK though, because it also has a REST API you can use to generate the clients.

While working on this, I found that Microsoft is also working on its own code generator, called AutoRest. AutoRest only supports three output formats at the moment: Ruby, Node.js (TypeScript) and C#. But having compared the output of both tools, I am much happier with the code AutoRest produces; it’s a lot cleaner.

In our case we have three client requirements: C#, client-side JavaScript and client-side TypeScript.

Whichever tool you choose, you need to be able to “run” your Web API service on a web server so that it can generate the Swagger JSON file used for client code generation. You could add this to the CI pipeline for your Web API, but you would need build steps like:

- Build the Web API project

- Deploy the Web API project to the dev server

- Download the JSON file from the dev server

- Build the client

Alternatively, you can create a separate build that you run on its own. I’ve tried both ways and both work fine.

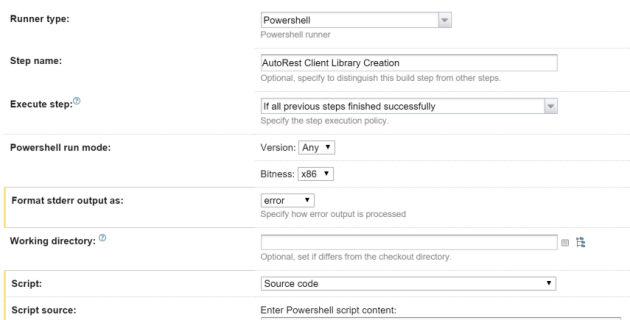

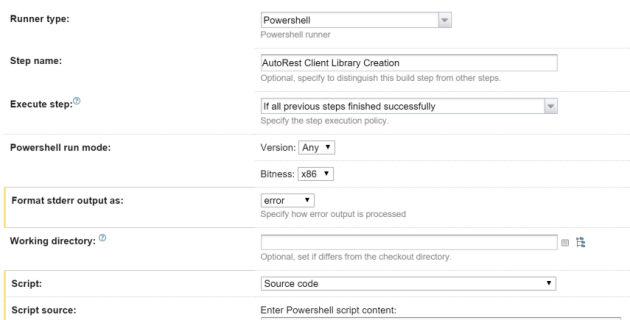

We decided to use AutoRest for the C# client. This was pretty straightforward: the AutoRest exe is available in a NuGet package, so we simply added that package to our Web API project, which made it available at build time. Then it was just a matter of adding a PowerShell step in TeamCity to create the client library. AutoRest outputs a bunch of C# .cs files that you need to compile, which is simply a matter of running csc.exe. After this I copy over a nuspec file that I have pre-baked for the client library.

```powershell
# Generate the C# client sources from the running Web API's Swagger document
.\Packages\autorest.0.13.0\tools\AutoRest.exe -OutputDirectory GeneratedCSharp -Namespace MyWebAPI -Input http://MyWebAPI.net/swagger/docs/v1 -AddCredentials

# Compile the generated .cs files into a class library
& "C:\Program Files (x86)\MSBuild\14.0\bin\csc.exe" /out:GeneratedCSharp\MyWebAPI.Client.dll /reference:Packages\Newtonsoft.Json.6.0.4\lib\net45\Newtonsoft.Json.dll /reference:Packages\Microsoft.Rest.ClientRuntime.1.8.2\lib\net45\Microsoft.Rest.ClientRuntime.dll /recurse:GeneratedCSharp\*.cs /reference:System.Net.Http.dll /target:library

# Copy the pre-baked nuspec next to the compiled DLL
xcopy MyWebAPI\ClientNuspecs\CSharp\MyWebAPI.Client.nuspec GeneratedCSharp
```
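If you would rather drive AutoRest from a Node-based build script than from a TeamCity PowerShell step, you can assemble the same arguments as an array and pass them to `child_process.spawn`. Below is a minimal sketch. The `buildAutoRestArgs` and `runAutoRest` helpers and their option names are hypothetical. Only the four flags are taken from the command line above.

```javascript
const { spawn } = require('child_process');

// Hypothetical helper: turns build options into the AutoRest flags used in the
// PowerShell step (-OutputDirectory, -Namespace, -Input, -AddCredentials).
function buildAutoRestArgs({ outputDirectory, namespace, input, addCredentials = false }) {
  const args = [
    '-OutputDirectory', outputDirectory,
    '-Namespace', namespace,
    '-Input', input,
  ];
  // Only emit the switch when the credentials methods/properties are wanted.
  if (addCredentials) {
    args.push('-AddCredentials');
  }
  return args;
}

// Hypothetical helper: runs AutoRest.exe and resolves with its exit code
// (assumes a Windows build agent where the exe can be launched directly).
function runAutoRest(exePath, options) {
  return new Promise((resolve, reject) => {
    const child = spawn(exePath, buildAutoRestArgs(options), { stdio: 'inherit' });
    child.on('error', reject);
    child.on('close', resolve);
  });
}

module.exports = { buildAutoRestArgs, runAutoRest };
```

Passing an argument array instead of a single command string avoids quoting problems if a path ever contains spaces.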

You will notice from the csc command line above that I had to add some references to get it to compile. These need to go into your nuspec file as well, so that people installing your client package get the correct dependencies. Here is a snippet from my nuspec file:

```xml
<frameworkAssemblies>
  <frameworkAssembly assemblyName="System.Net.Http" targetFramework="net45" />
</frameworkAssemblies>
<dependencies>
  <dependency id="Microsoft.Rest.ClientRuntime" version="1.8.2" />
  <dependency id="Newtonsoft.Json" version="6.0.8" />
</dependencies>
```
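Keeping the csc references and the nuspec dependencies in sync by hand is easy to get wrong. The sketch below assumes the references follow the `Packages\<Id>.<Version>\lib\...` layout used in the csc command above. Under that assumption, a small Node script can derive the `<dependency>` elements from those references. `nuspecDependencies` is a hypothetical helper and is not part of NuGet.

```javascript
// Matches package folders such as "Packages\Newtonsoft.Json.6.0.4\lib\net45\..."
// and captures the package id and its numeric version.
const PACKAGE_REFERENCE = /^\/reference:Packages[\\/]([^\\/]+?)\.(\d+(?:\.\d+)*)[\\/]/;

// Hypothetical helper: turn csc /reference: switches into nuspec <dependency> lines.
// Framework references like /reference:System.Net.Http.dll do not match and are
// skipped; those belong in <frameworkAssemblies> instead.
function nuspecDependencies(cscArgs) {
  const lines = [];
  for (const arg of cscArgs) {
    const match = PACKAGE_REFERENCE.exec(arg);
    if (match) {
      lines.push(`<dependency id="${match[1]}" version="${match[2]}" />`);
    }
  }
  return lines;
}

module.exports = { nuspecDependencies };
```

The versions this produces are the ones you compiled against. A bare `version` in a nuspec is a minimum, so consumers may still resolve a newer package.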

After this, just add a NuGet Publish step and you can start pushing your library to nuget.org or, in our case, to our private internal server.

For authentication we use Basic Auth over SSL, so we need the “-AddCredentials” command-line parameter to generate the extra methods and properties. You may or may not need this.

Below is an example console app in which I have installed the NuGet package that AutoRest created. It uses basic auth, which you may not need.

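```csharp
using System;
using Microsoft.Rest;   // BasicAuthenticationCredentials (Microsoft.Rest.ClientRuntime)
using MyWebAPI;         // namespace passed to AutoRest via -Namespace

namespace ConsoleApplication1
{
    class Program
    {
        static void Main(string[] args)
        {
            // MyClient is the AutoRest-generated client class
            var svc = new MyClient();
            svc.BaseUri = new Uri("https://MyWebAPILive.com");

            // Only available because the client was generated with -AddCredentials
            svc.Credentials = new BasicAuthenticationCredentials { UserName = "MyUser", Password = "MyPassword!" };

            Console.WriteLine(svc.HelloWorld());
            Console.ReadLine();
        }
    }
}
```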

Next we have Swagger Codegen for our client-side libraries. As I said before, I don’t want to add J2SE to our build environment because it adds complexity, so we are using the API instead. I’ve built a gulp job to do this.

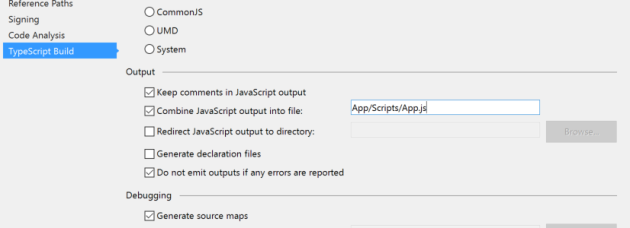

Why gulp? The JavaScript client output from Codegen is pretty rubbish. Instead of using it, I download the TypeScript library, compile it and then minify it, and I find this easier to do in gulp.

The Swagger UI for the Swagger Codegen API is at https://generator.swagger.io/#/. When you call the POST /gen/clients/{language} method, you pass in your JSON file. It then returns a URL that you can use to download a zip file containing the client package. Below is my gulpfile:

```javascript
var gulp = require('gulp');
var fs = require('fs');
var request = require('request');
var concat = require('gulp-concat');
var unzip = require('gulp-unzip');
var ts = require('gulp-typescript');
var tsd = require('gulp-tsd');

var tempFolder = 'temp';

gulp.task('default', ['ProcessJSONFile'], function () {
    // doco https://generator.swagger.io/#/
});

// Download the Swagger JSON from the running Web API, then send it to the Codegen API
gulp.task('ProcessJSONFile', function () {
    return request('http://MyWebAPI.net/swagger/docs/v1',
        function (error, response, body) {
            if (error != null) {
                console.log(error);
                return;
            }
            ProcessJSONFileSwagOnline(body);
        });
});

function ProcessJSONFileSwagOnline(bodyData) {
    // Swagger Codegen web API requires the spec to be wrapped in another object
    bodyData = "{\"spec\":" + bodyData + "}";
    return request({
        method: 'POST',
        uri: 'http://generator.swagger.io/api/gen/clients/typescript-angular',
        body: bodyData,
        headers: {
            "content-type": "application/json"
        }
    },
    function (error, response, body) {
        if (error) {
            return console.error('upload failed:', error);
        }
        // The response contains a link to the zipped client package
        var responseData = JSON.parse(body);
        var Url = responseData.link;
        console.log(Url);
        downloadPackage(Url);
    });
}

// Save the zip locally; the fixed delay is a workaround to let the download finish
function downloadPackage(Url) {
    request(Url, function (error, response, body) {
        if (error) {
            console.log(error);
        }
    }).pipe(fs.createWriteStream('client.zip'));
    setTimeout(extractPackage, 2000);
}

// Unzip the package into the temp folder, then (after another delay) copy out the .ts files
function extractPackage() {
    gulp.src("client.zip")
        .pipe(unzip())
        .pipe(gulp.dest(tempFolder));
    setTimeout(moveFiles, 2000);
}

function moveFiles() {
    return gulp.src(tempFolder + '/typescript-angular-client/API/Client/*.ts')
        .pipe(gulp.dest('generatedTS/'));
}
```
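The string concatenation that wraps the spec in `{"spec": ...}` works, but it will also happily send a truncated body or an HTML error page to the generator. A slightly safer sketch parses the downloaded document first and then builds the request options with `JSON.stringify`. The `buildGenRequest` helper is hypothetical. The check that the document is Swagger 2.0 is my own assumption about what the Web API emits, not something the Codegen API requires.

```javascript
// Base URL of the online generator, as used in the gulpfile.
const GENERATOR_BASE = 'http://generator.swagger.io/api/gen/clients/';

// Hypothetical helper: validate the downloaded spec and build request options for
// POST /gen/clients/{language}, failing early instead of sending junk to Codegen.
function buildGenRequest(specText, language) {
  const spec = JSON.parse(specText); // throws on an HTML error page or truncated body
  if (spec.swagger !== '2.0' || !spec.paths || typeof spec.paths !== 'object') {
    throw new Error('Expected a Swagger 2.0 document with a "paths" object');
  }
  return {
    method: 'POST',
    uri: GENERATOR_BASE + encodeURIComponent(language),
    headers: { 'content-type': 'application/json' },
    // The Codegen API expects the spec wrapped in an outer { "spec": ... } object.
    body: JSON.stringify({ spec: spec }),
  };
}

module.exports = { buildGenRequest };
```

The returned object has the same shape as the options passed to `request` in the gulpfile. It could therefore be used as `request(buildGenRequest(body, 'typescript-angular'), callback)`.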

I’ll be the first to admit that I am no Node.js expert. I’ve added a few workarounds using setTimeout in my script because I couldn’t get the async functions to work correctly. If anyone wants to show me how these should be done properly, please do 🙂
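One common alternative to a fixed delay is to wait until the file has actually been written. Below is a minimal sketch that uses only Node’s built-in `stream.pipeline`, assuming Node 10 or later. The `saveToFile` helper is a hypothetical name. In the gulpfile, you would pass the `request(Url)` stream as the source and chain the extraction step with `.then(...)` instead of `setTimeout`.

```javascript
const fs = require('fs');
const util = require('util');
const stream = require('stream');

// stream.pipeline wires the streams together and calls back once the destination
// has finished writing, or as soon as any stream in the chain errors.
const pipeline = util.promisify(stream.pipeline);

// Hypothetical helper: write a readable stream to disk and resolve with the path
// only after the file is completely written, instead of guessing with setTimeout.
async function saveToFile(source, filePath) {
  await pipeline(source, fs.createWriteStream(filePath));
  return filePath;
}

module.exports = { saveToFile };
```

A failed download also rejects the promise instead of leaving a half-written `client.zip` for the next step to trip over.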

At the end of this, you will have the TypeScript files in a folder that you can then process into a package. We are still working on pushing these to GitHub so that we can build a Bower package from them. I will write another blog post about this.

The TypeScript output always includes an api.d.ts file that you can reference from your TypeScript project to expose the client. I’ll do another post about how we set up our dev environment to compile the TypeScript from Bower packages.

For our JavaScript library we just need to add one more step:

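```javascript
// Compile every .ts file extracted into the temp folder into a single JS file
function compileTypeScriptClientLib() {
    var sourceFiles = [tempFolder + '/**/*.ts'];

    return gulp.src(sourceFiles)
        .pipe(ts({
            out: 'clientProxy.js'
        }))
        .pipe(gulp.dest('outputtedJS/'));
}
```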

This compiles our JavaScript library, which we can then also minify in gulp before packaging. Again, Bower is the technology we use to distribute client packages, so after this we push to GitHub, but I’ll do another blog post about that.

The TypeScript output you get from Codegen is an AngularJS client. That is fine because “most” of our apps already use Angular. However, a couple of our legacy apps don’t, so the client proxy object that is created needs a bit of work to inject its dependencies.

Below is an example of a JavaScript module that I use to wrap the AngularJS service and return it as a plain JavaScript object with the Angular dependencies injected:

```javascript
var apiClient = (function (global) {
    var ClientProxyMod = angular.module("ClientProxyMod", []);

    // Normally I'd have a settings.js file where I would store this
    ClientProxyMod.value("basePath", "http://MyWebAPILive.com/");

    // Register the Codegen-generated class as an Angular service with its dependencies
    ClientProxyMod.service("MyWebAPIController1", ['$http', '$httpParamSerializer', 'basePath', API.Client.MyWebAPIController1]);

    // Create an injector outside of any Angular app and resolve the service from it
    var prx = angular.injector(['ng', 'ClientProxyMod']).get('MyWebAPIController1');

    return {
        proxy: prx
    };
}());
```

You would need to do the above once for each controller in your Web API project, because Codegen outputs one service per controller.
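Rather than maintaining the list of controllers by hand, you could read it from the Swagger document itself. This sketch rests on two assumptions. The first is that your Swagger generator tags each operation with its controller name. The second is that Codegen creates one service per tag, which would explain the one-service-per-controller output. `controllerTags` is a hypothetical helper. The generated class names may still differ from the raw tags, so check them against the output before wiring up the wrappers.

```javascript
// HTTP method keys allowed on a Swagger 2.0 Path Item object.
const HTTP_METHODS = ['get', 'put', 'post', 'delete', 'options', 'head', 'patch'];

// Hypothetical helper: collect the distinct operation tags in a Swagger 2.0 document.
// Assumes each tag corresponds to one Web API controller (and one generated service).
function controllerTags(spec) {
  const tags = new Set();
  for (const pathItem of Object.values(spec.paths || {})) {
    for (const method of HTTP_METHODS) {
      const operation = pathItem[method];
      if (operation && Array.isArray(operation.tags)) {
        operation.tags.forEach((tag) => tags.add(tag));
      }
    }
  }
  return Array.from(tags).sort();
}

module.exports = { controllerTags };
```

Non-operation keys on a path item, such as `parameters`, are ignored because only the HTTP method keys are inspected.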

One of the dependencies of the service created by Codegen is “basePath”, the URL of the live service, so I pass this in as a value. You will also need to add this value to your AngularJS module when you use the client in an AngularJS app.

Using basic auth in AngularJS is pretty straightforward, because you can set it on the $http object, which is exposed as a property on the service.

```javascript
apiClient.proxy.$http.defaults.headers.common['Authorization'] = "Basic " + btoa(username + ":" + password);
```
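`btoa` is a browser API, and it throws on characters outside Latin-1. In Node, for example in a build-time smoke test against the live service, `Buffer` is the usual way to build the same header. Below is a minimal sketch. `basicAuthHeader` is a hypothetical name, and the helper encodes the credentials as UTF-8.

```javascript
// Hypothetical helper: build the value of an HTTP Basic Authorization header by
// encoding "username:password" as UTF-8 and then Base64.
function basicAuthHeader(username, password) {
  if (username.includes(':')) {
    // RFC 7617: a user-id containing a colon is invalid, since the first colon splits the pair.
    throw new Error('Basic auth usernames must not contain ":"');
  }
  return 'Basic ' + Buffer.from(username + ':' + password, 'utf8').toString('base64');
}

module.exports = { basicAuthHeader };
```

For ASCII credentials this produces the same value as the `btoa` line above, so the header can be passed to `request` as `headers: { Authorization: basicAuthHeader(user, pass) }`.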

Then you can simply call your methods from this apiClient.proxy object.